On August 30, 2010 I published a scientific paper in the peer-reviewed journal PLoS ONE entitled “Dynamics of Wind Setdown at Suez and the Eastern Nile Delta”. This post is a follow-up to my earlier post of August 31, which focused on Open Access. So – what happened?

Plenty! My employer issued a press release on September 21, 2010, which is our standard practice for research that we think will have popular interest. There was extensive media coverage during that week, including segments by ABC News, Fox 31 KDVR in Denver, National Public Radio, CNN.com, and the BBC. After I had worked hard on this research for years as a graduate student, it was gratifying to receive the attention! The number of article views at PLoS ONE is now 39,009. Our animation of Parting the Waters has been viewed 542,928 times on YouTube, and my verbal explanation there has been viewed 58,618 times.

What role did Open Access play in the publication of my research results?

1. Open Access is ideally suited to inter-disciplinary topics of popular interest.

Many scientific journals focus on a single area of science, and reject manuscripts that are judged to fall outside that discipline. Is my research oceanography, meteorology, archaeology, history, coastal oceanography, biblical studies, numerical modeling, geology, or what? A limited-access journal tends to exclude from its readership those scientists who do not subscribe to the journal, which places a barrier in front of experts outside the journal’s focus. That same barrier to readership also discourages interested amateurs who are willing to brave the paper’s scientific rigor and try to understand what it’s about. I recognized that the Exodus problem is highly inter-disciplinary, and that this publication might spark great popular interest. I didn’t want to exclude anyone from reading the paper, even with a small download fee.

2. Open Access increases the number of article views.

PLoS ONE includes a number of useful metrics, including the number of article views. As noted in my previous post, I want lots of people to read my paper. Although I don’t have extensive metrics comparing article views for Open Access and limited-access journals, my colleagues tell me that 39,009 views is a lot for a scientific paper! We think it would be hard to get those numbers with limited access. Unfortunately, I cannot re-run this publication experiment with a traditional journal and count up the views again.

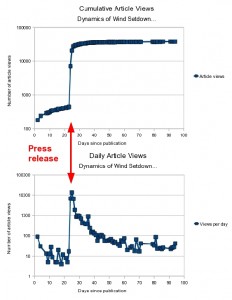

But I can plot the article views on a daily basis and try to extract some meaning out of the graphs! Here are the cumulative and daily views on a log scale (see figure):

Obviously the press release and subsequent media coverage had a huge effect on article readership. Over two weeks the number of article views zoomed up from 500 to over 35,000! Since the graph doesn’t shoot up until the press release, people must be reading the news first and then looking up my scientific paper. It is safe to say that the media coverage caused a jump in article views, not the other way around. Media coverage drives people to my research; Open Access lets them in the front door. Some 35,000 readers (including some repeat visitors) became interested enough to look at the original paper, and indeed they could. Open Access works together with media publicity to drastically increase the societal impact of scientific research.
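For readers who want to make a similar graph from their own article metrics, the cumulative and daily curves described above can be computed with a few lines of Python. This is only a minimal sketch: the daily counts below are made-up placeholders, not the real PLoS ONE numbers, and the helper names `cumulative` and `log10_or_none` are my own for illustration.

```python
import math
from itertools import accumulate

# Hypothetical daily view counts (placeholders, NOT the actual article data).
# Imagine a quiet stretch followed by a burst of media coverage.
daily_views = [40, 25, 10, 5, 0, 2, 8000, 12000, 6000, 900]


def cumulative(daily):
    """Return the running total of views, one entry per day."""
    return list(accumulate(daily))


def log10_or_none(count):
    """Log-scale value for plotting; days with zero views cannot be shown."""
    return math.log10(count) if count > 0 else None


cumulative_views = cumulative(daily_views)

# Print one row per day: day number, daily views, cumulative views,
# and the log10 of each (what a log-scale axis would display).
for day, (daily, total) in enumerate(zip(daily_views, cumulative_views), start=1):
    print(day, daily, total, log10_or_none(daily), log10_or_none(total))

# The day with the most views marks the peak of the burst.
peak_day = daily_views.index(max(daily_views)) + 1
print("Peak day:", peak_day)
```

A log scale is useful here because the quiet days and the burst days differ by several orders of magnitude; on a linear axis the early days would be squashed flat against the bottom of the graph.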

3. Open Access assists helpful amateurs to educate the general public.

In comments and blog posts I have noticed that about 1 in every 20 posts is from a knowledgeable person who is trying to educate the rest of the folks on the forum. Often they have posted a link to the original paper, with the remark that it’s open access. These knowledgeable people are “amateurs”, and I use that term in the sense that they love science! They take time to educate themselves, they read technical articles, and they provide helpful references for everyone else. They look up facts instead of just typing in something and hitting the Post button. They verify the details and correct mistaken assumptions. Helpful amateurs are very important in communicating science. Professional scientists cannot do it alone. I can make the amateurs’ job easier by providing open access to my scientific publications. I appreciate their valuable efforts.

Open Access increases my impact as a scholar. Scientific research is hard work! The societal impact makes it all worthwhile.

Weatherwise magazine

On a related note, I have just published an article in Weatherwise magazine as a follow-up to the earlier scientific paper. This magazine article, “Could Wind Have Parted the Red Sea?”, explains the parting of the sea to the general public. So if you don’t want to read about drag coefficients and Mellor-Yamada mixing, that’s where to go!

UCAR Policy

The views expressed here are not necessarily those of my employer. UCAR has adopted an Open Access Policy, and has implemented that policy by the creation of an institutional repository of scholarly works known as OpenSky.